A Better Model of Measure for Learning and Development

To improve any measure of success, that measure must first be established. The principle applies equally to learning and development and to leadership performance.

Failure to track and measure changes in leadership performance increases the probability that organizations won’t take improvement initiatives seriously, according to a 2014 McKinsey & Company article, “Why Leadership Development Programs Fail” by Pierre Gurdjian.

This observation reflects one of four common mistakes that, according to the article, organizations make in getting the most from their learning and development programs. “We frequently find that organizations pay lip service to the importance of developing leadership skills, but have no evidence to quantify the value of their investment,” writes Gurdjian.

Squaring Up Learning Development

When it comes to measuring learning and development projects, our firm consistently applies the Kirkpatrick Model, or four levels of training evaluation (Kirkpatrick, D. L., 1994). This well-known method is useful for analyzing and evaluating the results of any learning and development program. Together, the four evaluation levels below provide a comprehensive and meaningful overview of the results:

- Level One (Reaction): How participants felt about the training or learning experience;

- Level Two (Learning): Measurement of the increase in knowledge before and after the program;

- Level Three (Behaviour): Extent to which learning is applied back on the job; and

- Level Four (Results): Effect of the participants’ learning on the business or environment.

Of Good Starts and Better Data

Aggregating the data from all four levels produces a comprehensive picture of the training program’s overall impact. Level One is only the starting point, yet it is often where measurement ends. As time and resources allow, measurement should continue through to Level Four to derive maximum benefit from the learning and development investment.

Here is what we typically do:

Level One: Reaction

This level provides a straightforward evaluation of how participants react to the learning experience, simply by asking them how they felt about it. We apply this evaluation in most workshops and refer to it as a “smile sheet.”

Level Two: Learning

This step occurs at the end of a learning and development program where participants and their managers are asked to assess the participants’ level of knowledge and skills in the key areas targeted by the program. In a recent emerging leadership development program that we facilitated, participants’ managers identified strategic thinking, delegation and the ability to provide feedback as the skills that improved the most.

Level Three: Behaviour

Our approach at this level is to survey program participants and their managers about their perception of changes in behaviour that they attribute to the program. To determine behaviour change, we use a mini-feedback survey: essentially a simpler, shorter form of a 360-degree assessment. People who work closely with each participant (manager, peers, direct reports) are asked to provide feedback on the progress the participant has made toward the behaviours targeted for improvement.

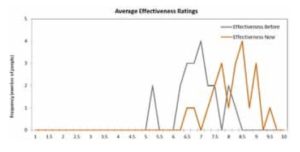

The adjoining graph is an actual example of how a program cohort performed. Participants’ observers were asked to rate each participant’s effectiveness by providing “before and after” scores at the close of the program. In this instance, the “after” scores (in orange) reflect overall improvement.
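As a rough illustration of how “before and after” observer ratings can be rolled up into a comparison like the one in the graph, the sketch below averages each targeted behaviour’s scores across observers and reports the change. The behaviour names, the 1 to 5 rating scale, the `summarize` helper, and all ratings are hypothetical placeholders, not data from the cohort above; this is a minimal sketch under those assumptions, not Kwela’s actual scoring method.

```python
from statistics import mean

# Hypothetical observer ratings for one participant (1-5 scale assumed).
# Each tuple is one observer's (before, after) score for a targeted behaviour,
# both collected at the close of the program.
ratings = {
    "Strategic thinking": [(2, 4), (3, 4), (2, 3)],
    "Delegation": [(3, 4), (2, 4)],
    "Providing feedback": [(3, 3), (2, 4), (3, 5)],
}

def summarize(ratings):
    """Return {behaviour: (mean_before, mean_after, change)} averaged across observers."""
    summary = {}
    for behaviour, pairs in ratings.items():
        before = mean(b for b, _ in pairs)  # average "before" score
        after = mean(a for _, a in pairs)   # average "after" score
        # Change is computed from the unrounded means, then rounded for display.
        summary[behaviour] = (round(before, 2), round(after, 2), round(after - before, 2))
    return summary

for behaviour, (before, after, change) in summarize(ratings).items():
    print(f"{behaviour}: before {before}, after {after}, change {change:+}")
```

A simple mean keeps the roll-up transparent; a fuller analysis might also consider the spread of ratings and the number of observers behind each behaviour before drawing conclusions.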

Level Four: Results

In this step, we evaluate the extent to which participants and their managers believe that program participation has affected predetermined performance benchmarks back on the job.

This real-life example shows that both managers and participants felt that “employee engagement” and “manager/peer relationships” were the areas most affected by the program.

Make Metrics Matter More

A comprehensive set of metrics, such as those provided by the Kirkpatrick Model, yields valuable data that HR professionals can use either to demonstrate the contribution of learning and development investment to the business or to support changes in their training strategies.

“Understanding the impact of a learning experience is critical for organizations that are creating a culture of continuous improvement,” said John Horn, manager, learning and development, human resources with Vancity Credit Union. “What I appreciate about the work of KWELA with our Community Investment team is that their use of a known model aligns with our team’s approach without relying on the availability of our team to drive the process. It is both convenient and strategic when external learning partners evaluate their impact with known approaches that fit into an organization’s overall learning strategy.”

For managers, such training evaluation is particularly valuable for providing insights into individual team members’ development and further learning needs. In this light, measurement is a logical way to close the loop on any learning and development strategy and is well worth the effort.

Nic Tsangarakis is co-founder and principal of Kwela Leadership & Talent Management.